Cerebras WSE-3: Ambitious AI Processing or a Niche Solution?

Cerebras' WSE-3 promises to revolutionize AI hardware with its wafer-scale design, offering unmatched performance for large AI models. However, skepticism remains about its real-world impact, particularly against NVIDIA's entrenched GPU dominance.

In artificial intelligence (AI), hardware sets the limits of what models can do. Over the past decade, NVIDIA has become the dominant supplier, with GPUs providing the compute behind most large-scale AI models. A newer contender, Cerebras Systems, is drawing attention with its Wafer-Scale Engine 3 (WSE-3), an AI-specific processor built very differently from a conventional chip. Cerebras is making bold promises, so it is worth weighing the WSE-3’s potential against its practical realities, especially in comparison with an established player like NVIDIA.

Cerebras’ Wafer-Scale Engine: An Overview

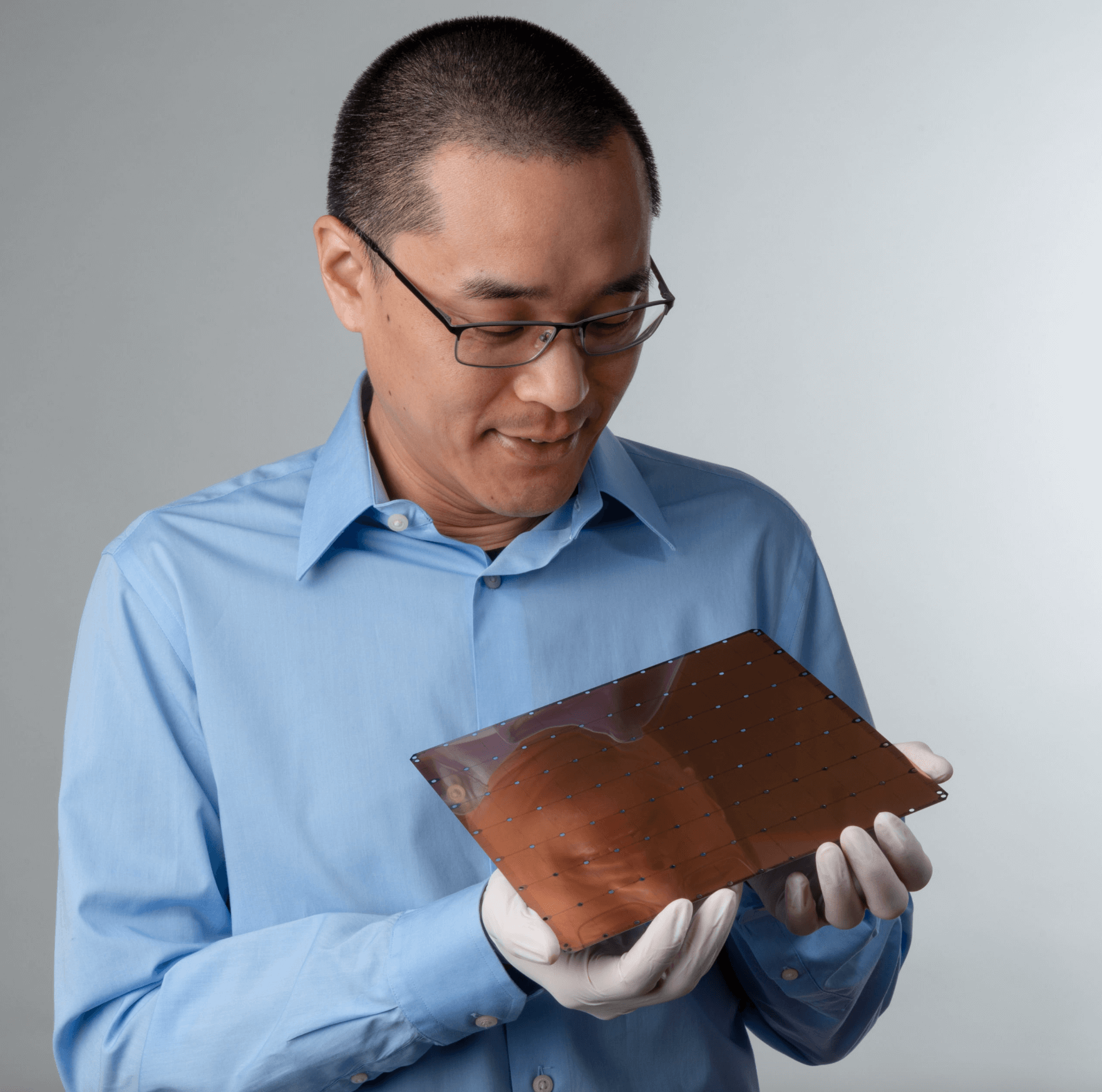

Founded in 2016, Cerebras has taken a different approach from the rest of the AI hardware field. While companies like NVIDIA adapted GPUs, originally designed for graphics and gaming, to accelerate AI, Cerebras built its Wafer-Scale Engine (WSE) as a processor designed solely for AI workloads. Instead of dicing a wafer into many individual chips, as is done for NVIDIA’s GPUs, Cerebras keeps the WSE as a single piece of silicon spanning nearly an entire 12-inch (300 mm) wafer.

- The WSE-1 (2019): 1.2 trillion transistors and 400,000 processing cores.

- The WSE-2 (2021): 2.6 trillion transistors and 850,000 cores.

- The WSE-3 (2024): 4 trillion transistors and 900,000 cores, the largest of the three.

Cerebras claims this scale can deliver unparalleled AI performance, particularly for large models like GPT-3 and DALL-E.

What the WSE-3 Promises

Cerebras positions the WSE-3 as the ultimate solution for high-performance AI processing, designed to address limitations faced by traditional processors:

- Memory Bandwidth and Latency: WSE-3’s integrated on-wafer memory minimizes data movement bottlenecks for training and inference.

- Scalability: Cerebras claims the single-wafer architecture can handle far larger models without the need for complex distributed systems.

- Energy Efficiency: Tight integration could lead to efficiency gains over GPU clusters, reducing overall power consumption.

- AI-Specific Design: Built exclusively for neural networks, the WSE-3 could outperform general-purpose hardware like NVIDIA’s H100 and A100 GPUs in certain scenarios.
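
The memory-bandwidth point in the list above can be made concrete with a roofline-style estimate, a common back-of-the-envelope model in which attainable throughput is the smaller of peak compute and memory bandwidth multiplied by arithmetic intensity. The sketch below is illustrative only: the function name and every device figure are hypothetical placeholders, not Cerebras or NVIDIA specifications.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute ceiling and the memory ceiling."""
    memory_ceiling = bandwidth_tb_s * flops_per_byte  # TB/s * FLOP/byte = TFLOP/s
    return min(peak_tflops, memory_ceiling)


# Hypothetical placeholder devices (NOT vendor specifications).
devices = {
    "off-chip memory": {"peak_tflops": 1000.0, "bandwidth_tb_s": 3.0},
    "on-wafer memory": {"peak_tflops": 1000.0, "bandwidth_tb_s": 1000.0},
}

# Arithmetic intensity: FLOPs performed per byte moved (assumed workload values).
for intensity in (1.0, 10.0, 100.0):
    for name, spec in devices.items():
        bound = attainable_tflops(spec["peak_tflops"], spec["bandwidth_tb_s"], intensity)
        print(f"intensity={intensity:>5} FLOP/B  {name}: {bound:.0f} TFLOP/s")
```

With equal peak compute, the device with less bandwidth stays memory-bound until the workload's arithmetic intensity is high enough. That is the bottleneck on-wafer memory is meant to relieve.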

A Parallel with NVIDIA and the GPU Industry

NVIDIA’s dominance in the AI hardware market rests on a strong ecosystem that spans powerful hardware and software stacks like CUDA. Products like the H100 GPU add AI-focused features such as Tensor Cores while remaining versatile enough for other tasks. NVIDIA’s GPUs have proven reliable and scalable, handling diverse workloads with consistent performance.

In contrast, Cerebras’ WSE-3 is designed purely for AI tasks. While this focus could yield performance benefits, it also limits flexibility outside of AI, which may restrict adoption. Furthermore, the WSE-3 is not yet widely available, so real-world performance data is scarce compared with NVIDIA’s established benchmarks.

The Cautious Optimism Surrounding WSE-3

While Cerebras’ WSE-3 shows immense potential, several key challenges remain:

- Market Adoption: NVIDIA’s ecosystem is entrenched, and the transition to a new architecture could require significant investment.

- Software Compatibility: NVIDIA’s CUDA software stack is widely used; Cerebras’ ecosystem may require developers to port or re-engineer their models.

- Reliability: Wafer-scale integration faces technical complexities like yield management and defect tolerance.

- Cost: High costs may limit WSE-3’s adoption, particularly if it cannot demonstrate clear cost-performance advantages over GPUs.
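
The cost question in the list above comes down to cost per unit of useful work rather than sticker price. The sketch below shows one simple way to frame that comparison, assuming a fixed purchase price plus electricity over a usage period. The `System` class, the `cost_per_unit_work` helper, and all numbers are hypothetical and chosen only for illustration; they are not real prices or benchmark results.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    price_usd: float    # purchase price (hypothetical)
    throughput: float   # work units per second, e.g. training samples/s (hypothetical)
    power_kw: float     # average power draw in kilowatts (hypothetical)


def cost_per_unit_work(system: System, hours: float, usd_per_kwh: float) -> float:
    """Purchase price plus energy cost, divided by total work done over `hours`."""
    total_cost = system.price_usd + system.power_kw * hours * usd_per_kwh
    total_work = system.throughput * hours * 3600  # seconds in the period
    return total_cost / total_work


# Illustrative placeholders only; neither entry reflects a real product.
candidates = [
    System("gpu-cluster (hypothetical)", price_usd=2_000_000, throughput=50_000, power_kw=80),
    System("wafer-scale (hypothetical)", price_usd=2_500_000, throughput=70_000, power_kw=25),
]

for s in candidates:
    cost = cost_per_unit_work(s, hours=3 * 365 * 24, usd_per_kwh=0.10)
    print(f"{s.name}: ${cost:.3e} per work unit")
```

A higher-priced system can still come out ahead if its throughput and energy use are better by a wide enough margin. Equally, a small performance lead is easily erased by a large price gap, which is why real benchmark data matters here.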

Final Thoughts: Revolution or Niche?

Cerebras’ WSE-3 represents a bold vision of AI hardware, built at a scale no conventional chip matches. If it lives up to its promises, it could disrupt the AI hardware landscape by giving large deep learning models far more processing power on a single device.

However, disrupting the NVIDIA-dominated GPU market will not be easy. NVIDIA continues to innovate, and its GPUs remain the go-to choice for AI researchers and industries. While Cerebras offers a glimpse into the future of specialized AI hardware, it remains to be seen whether the WSE-3 will reach its full potential in practice.