
Gradient Network: Decentralized Edge Compute for AI

Gradient Network is a decentralized compute platform built on the Solana blockchain that brings compute power to the edge, closer to users and data sources. It targets the main limitations of traditional cloud infrastructure (latency, cost, and data privacy) by running AI processing and other compute-intensive tasks at the edge. With its first product, the Gradient Sentry Node, users can contribute unused compute power and earn rewards, creating a global, collaborative ecosystem. Ready to join the decentralized future?

Gradient Network uses the Solana blockchain to offer edge-based compute resources as an alternative to traditional cloud infrastructure. As AI workloads grow rapidly, centralized systems run into limits on cost, latency, and data privacy. Gradient Network aims to provide a more accessible, affordable, and user-centric solution in which compute resources run at the edge, close to the data.

🚀 Vision: Decentralization Meets Edge Compute

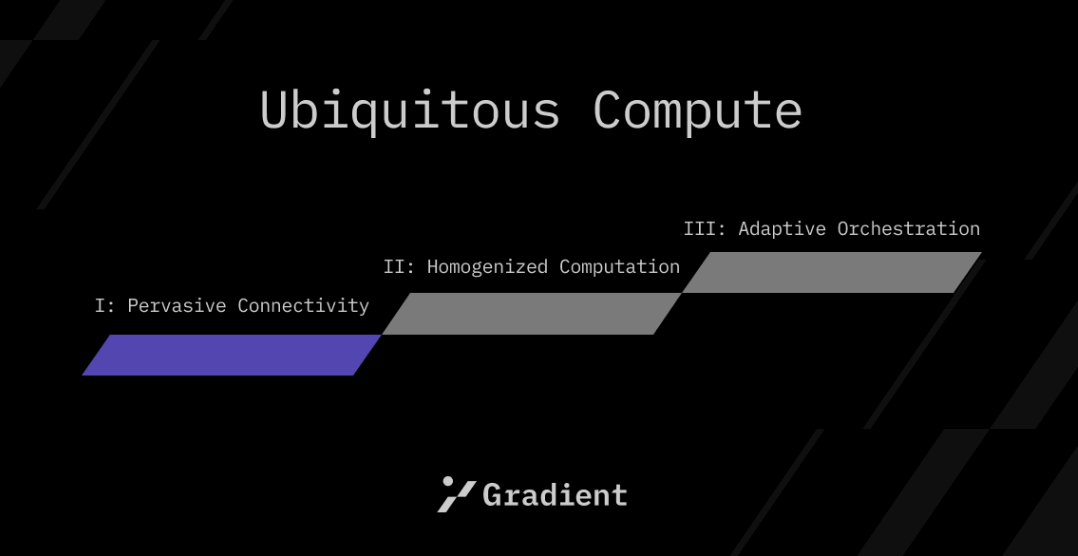

Gradient Network’s vision is built on three core pillars:

1️⃣ Pervasive Connectivity

Enabling seamless communication between edge devices to reduce latency, enhance robustness, and safeguard data privacy.

2️⃣ Homogenized Computation

Creating a standardized environment so that computation can run anywhere, regardless of location or hardware, ensuring universal accessibility.

3️⃣ Adaptive Orchestration

Intelligent orchestration of resources, dynamically allocated based on real-time demand and availability, for optimized efficiency.
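To make the third pillar more concrete, here is a minimal sketch of demand- and availability-aware placement. It is not Gradient Network's scheduler: the `EdgeNode` type, its fields, and the "lowest latency among nodes with enough free capacity" rule are all assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class EdgeNode:
    """Hypothetical edge node record; not a Gradient Network data type."""
    name: str
    latency_ms: float   # assumed: measured round-trip time to the requester
    free_capacity: int  # assumed: abstract compute units currently available


def pick_node(nodes: list[EdgeNode], required_capacity: int) -> Optional[EdgeNode]:
    """Return the lowest-latency node that can fit the job, or None."""
    candidates = [n for n in nodes if n.free_capacity >= required_capacity]
    if not candidates:
        return None
    return min(candidates, key=lambda n: n.latency_ms)


def assign(nodes: list[EdgeNode], required_capacity: int) -> Optional[EdgeNode]:
    """Place a job and reserve capacity so later jobs see updated availability."""
    node = pick_node(nodes, required_capacity)
    if node is not None:
        node.free_capacity -= required_capacity
    return node
```

Because `assign` reserves capacity on the chosen node, repeated calls spill over to the next-closest node once the nearest one is full, which is the simplest form of allocation that reacts to real-time availability.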

💡 Use Cases: Empowering AI, Content Delivery, and Serverless Functions

Gradient Network’s edge compute model supports:

AI Inference: AI models, including large language and image recognition systems, require heavy compute power. Edge computing allows these models to operate closer to users, reducing latency and improving inference speed.

Content Delivery: Decentralizing compute power enables faster load times and reliable content availability.

Serverless Functions: Supports event-driven functions that run without server management, seamlessly fitting into a decentralized infrastructure.

🛠️ Key Product: Gradient Sentry Node

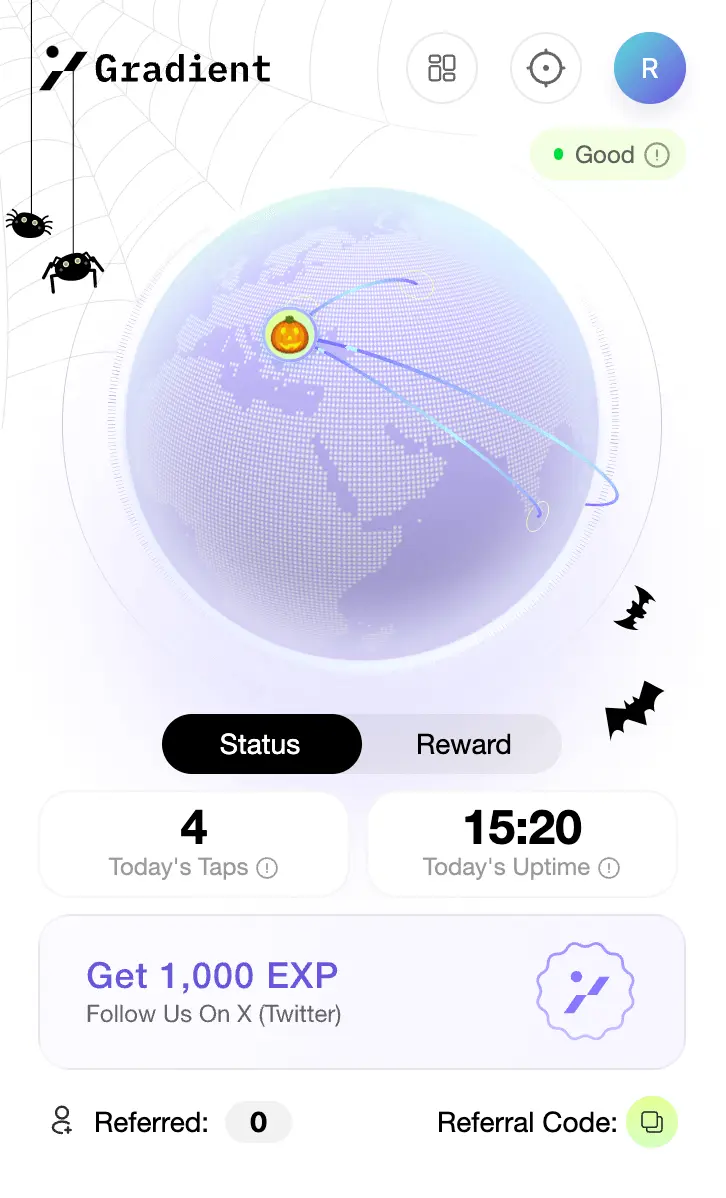

Gradient Sentry is Gradient’s first product and is available in open beta. It allows users to join the network by contributing unused compute power to support decentralized workloads. Contributors are rewarded for their participation, fostering an incentivized and collaborative ecosystem.

Key Highlights:

- Mapping global connectivity: Sentry Nodes help monitor global connectivity, stabilizing the decentralized network.

- Powering edge computing: Sentry Nodes bring compute resources closer to users, critical for low-latency applications.

Gradient Sentry Node extension as seen on Chrome

🌍 AI and Edge Compute in Decentralized Networks

As AI applications demand high-throughput, low-latency systems, edge computing becomes essential. Decentralized systems like Gradient Network leverage edge resources, supporting AI workloads in a sustainable, equitable way. This approach empowers a broader ecosystem of contributors, driving a future of AI where resources are globally distributed and universally accessible.

🌐 Learn More: Read Gradient Network’s official documentation to learn more about its mission and technology.

🔗 Get Involved: Join the Gradient Network and contribute your unused compute power today!