
The Importance of PCIe Lanes Between GPU, Motherboard, and CPU in the AI Era

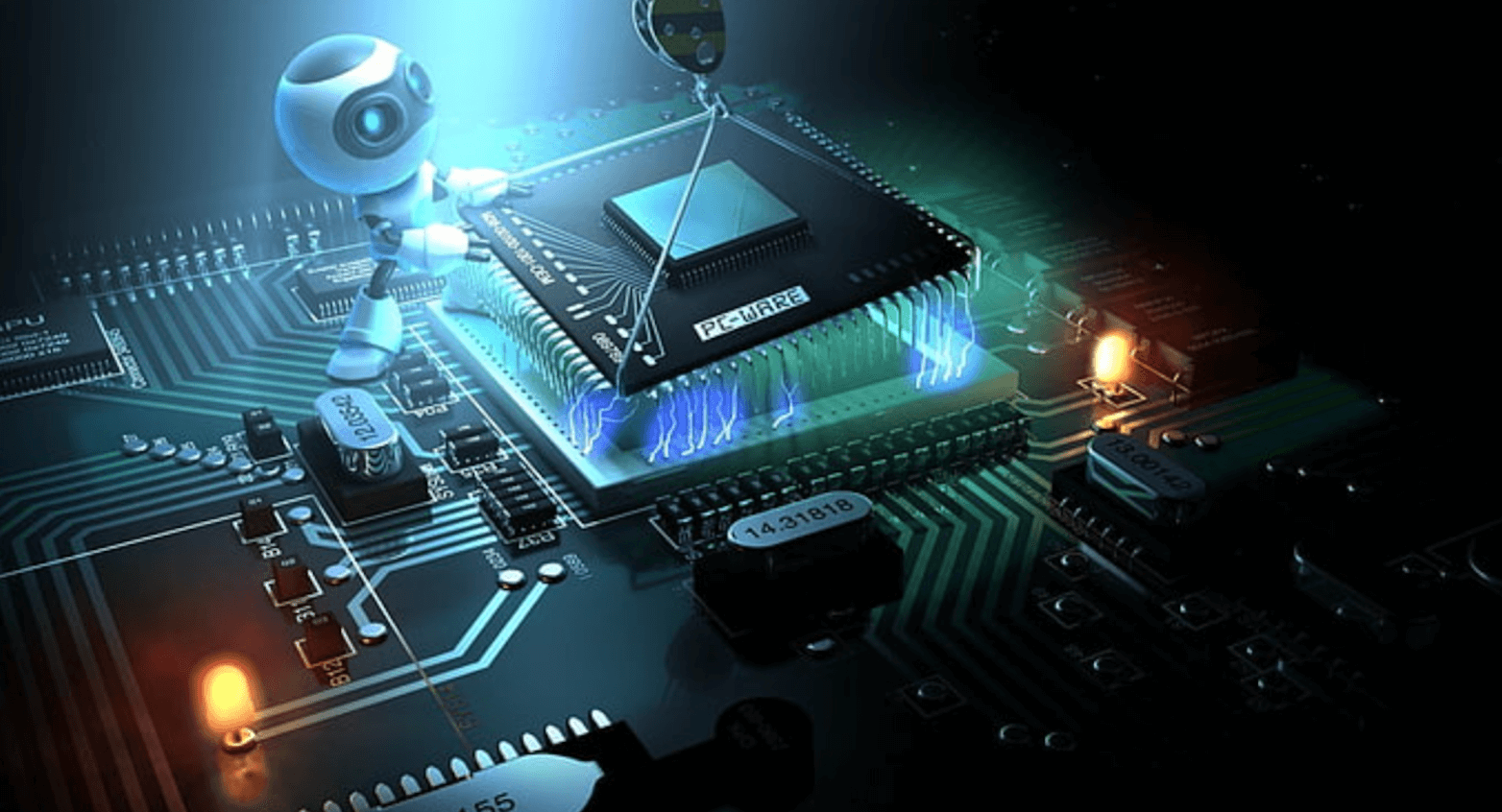

In the AI era, optimizing hardware isn't just about powerful CPUs and GPUs; it's about ensuring efficient communication between them. PCIe lanes, the data highways the motherboard routes between the CPU and the GPU, play a critical role in AI tasks like deep learning and model inference. Adequate PCIe bandwidth enables faster data transfers, better-fed multi-GPU setups, and lower-latency real-time processing, making lane allocation essential for high-performance AI systems. As PCIe 5.0 platforms become more common, choosing hardware with more bandwidth will help future-proof your system for next-gen AI workloads.


As AI continues to drive advancements in fields like deep learning, neural network training, and data processing, hardware optimization becomes critical for maximizing performance. While GPUs and CPUs often get the spotlight, PCIe lanes, the data pathways the motherboard routes between these components, are just as essential.

What Are PCIe Lanes?

PCIe lanes act as high-speed data highways between the CPU, GPU, and other components. Each lane is a full-duplex serial link, and a device's connection bundles several of them (x4, x8, x16). Total bandwidth scales with both the number of lanes and the PCIe generation, since each generation roughly doubles the per-lane rate. In AI tasks, where huge datasets are processed rapidly, sufficient PCIe bandwidth is crucial to avoid performance bottlenecks.
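
To make that scaling concrete, here is a minimal Python sketch that estimates one-direction link bandwidth from the generation and lane count. The per-lane signaling rates (2.5, 5, 8, 16, and 32 GT/s) and line encodings (8b/10b for PCIe 1.0 and 2.0, 128b/130b for 3.0 through 5.0) come from the PCIe specifications; the function name `link_bandwidth_gbps` is hypothetical, and the estimate ignores packet and flow-control overhead, so measured transfers will be somewhat lower.

```python
# Raw signaling rate per lane (GT/s) and line-encoding efficiency per generation.
# Protocol overhead (packet headers, flow control) is ignored, so real
# throughput will be somewhat lower than these figures.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe link in gigabytes per second."""
    if gen not in PCIE_GENERATIONS:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes < 1:
        raise ValueError("a link needs at least one lane")
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return gt_per_s * efficiency / 8 * lanes


if __name__ == "__main__":
    for gen in (3, 4, 5):
        for lanes in (4, 8, 16):
            print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_gbps(gen, lanes):5.1f} GB/s")
```

Running it shows that PCIe 4.0 x8 offers about the same bandwidth as PCIe 3.0 x16 (roughly 15.8 GB/s), which is why a newer generation can offset a narrower slot.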

Why PCIe Lanes Matter for AI

High Data Throughput

AI model training repeatedly moves batches of data from system memory to GPU memory. More PCIe lanes give that traffic more bandwidth, so the GPU spends less time waiting for input during large-scale computations.

Multi-GPU Systems

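AI workloads often use multiple GPUs for parallel processing. Each GPU needs enough lanes to receive data at full speed, but a CPU provides only a fixed number of them, so adding cards can force slots to share lanes (for example, two GPUs running at x8 each instead of x16). When bandwidth falls short, performance can suffer.

Faster Inference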

Real-time AI applications depend on low inference latency. Sufficient PCIe bandwidth shortens the time it takes to move inputs to the GPU and results back, which matters for tasks like autonomous driving or medical imaging.

High-Performance Computing

Complex AI models often run on HPC systems with multiple CPUs and GPUs. PCIe lanes form the backbone that links these components, and without enough lanes, even powerful hardware can become bottlenecked.
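
The lane-sharing and data-movement points above can be sketched with some back-of-the-envelope Python. Everything here is an illustrative assumption: the lane budgets (16 and 64), the even-split rule in the hypothetical `lanes_per_gpu` helper, and a batch of 256 float32 images at 224x224. Real motherboards wire each slot to fixed lanes and follow bifurcation rules listed in their manuals, and the timings are idealized PCIe 4.0 figures without protocol overhead.

```python
# Back-of-the-envelope sketch: split a hypothetical CPU lane budget across GPUs
# and estimate an idealized host-to-device copy time for one training batch.
# Lane budgets, the even-split rule, and the batch shape are assumptions.

STANDARD_WIDTHS = (16, 8, 4, 2, 1)  # common slot widths, widest first; x16 caps each GPU

# One-direction GB/s per lane after 128b/130b encoding, ignoring protocol overhead.
PER_LANE_GBPS = {
    3: 8.0 * 128 / 130 / 8,
    4: 16.0 * 128 / 130 / 8,
    5: 32.0 * 128 / 130 / 8,
}


def lanes_per_gpu(lane_budget: int, num_gpus: int) -> int:
    """Widest standard link each GPU gets if the budget is split evenly (hypothetical rule)."""
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    share = lane_budget // num_gpus
    for width in STANDARD_WIDTHS:
        if width <= share:
            return width
    raise ValueError("not enough lanes to give every GPU a link")


def transfer_ms(num_bytes: int, gen: int, lanes: int) -> float:
    """Idealized time in milliseconds to copy num_bytes over one PCIe link."""
    bytes_per_second = PER_LANE_GBPS[gen] * lanes * 1e9
    return num_bytes / bytes_per_second * 1e3


if __name__ == "__main__":
    # Hypothetical batch: 256 RGB images at 224x224 pixels, 4 bytes per float32 value.
    batch_bytes = 256 * 224 * 224 * 3 * 4
    for budget in (16, 64):  # hypothetical lane budgets
        for gpus in (1, 2, 4):
            width = lanes_per_gpu(budget, gpus)
            ms = transfer_ms(batch_bytes, gen=4, lanes=width)
            print(f"{budget} lanes, {gpus} GPU(s): x{width} each, {ms:.1f} ms per batch copy")
```

Under these assumptions, copying the batch takes roughly 4.9 ms over an x16 link versus about 9.8 ms at x8. Frameworks can often overlap such copies with computation, so a narrower link hurts most when the GPU ends up waiting for data.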

Preparing for the Future

As PCIe standards evolve, with PCIe 5.0 now reaching mainstream platforms, upgrading to hardware that offers more lanes and newer PCIe generations will help your system handle next-gen AI workloads. Selecting the right motherboard and CPU, and ensuring proper lane allocation, will be key to achieving the best performance for AI applications.
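
One practical way to check lane allocation on Linux is to compare each GPU's negotiated link with its maximum. The kernel exposes these through the sysfs attributes `current_link_speed`, `current_link_width`, `max_link_speed`, and `max_link_width` under `/sys/bus/pci/devices/`. The sketch below assumes a Linux system and treats any device whose PCI class starts with `0x03` (display controller) as a GPU; the helper names `read_attr` and `gpu_links` are hypothetical.

```python
from pathlib import Path

# PCI class codes beginning with 0x03 are display controllers (VGA, 3D, etc.).
DISPLAY_CLASS_PREFIX = "0x03"


def read_attr(device: Path, name: str) -> str:
    """Return a stripped sysfs attribute, or 'n/a' if it is missing or unreadable."""
    try:
        return (device / name).read_text().strip()
    except OSError:
        return "n/a"


def gpu_links(root: Path = Path("/sys/bus/pci/devices")) -> list[dict]:
    """Collect negotiated vs. maximum link speed and width for display-class devices."""
    if not root.is_dir():
        return []  # not Linux, or sysfs is not mounted
    results = []
    for device in sorted(root.iterdir()):
        if not read_attr(device, "class").startswith(DISPLAY_CLASS_PREFIX):
            continue
        results.append({
            "address": device.name,
            "current_speed": read_attr(device, "current_link_speed"),
            "current_width": read_attr(device, "current_link_width"),
            "max_speed": read_attr(device, "max_link_speed"),
            "max_width": read_attr(device, "max_link_width"),
        })
    return results


if __name__ == "__main__":
    for link in gpu_links():
        flag = "" if link["current_width"] == link["max_width"] else "  <-- narrower than max"
        print(f"{link['address']}: x{link['current_width']} @ {link['current_speed']} "
              f"(max x{link['max_width']} @ {link['max_speed']}){flag}")
```

A GPU reporting x8 when its maximum is x16 usually points to lane sharing or a card seated in a narrower slot. Many GPUs also lower their link speed when idle to save power, so check the speed while a workload is running.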

Optimizing your hardware's PCIe configuration is not just about buying the best GPU or CPU; it's about ensuring seamless communication between all your components, which is critical for success in AI-driven tasks.